Descriptions

The SOCD dataset is the first dataset that can be used to evaluate object-level change detection. It comprises 15,000 perspective image pairs and object-level change labels synthesized with the CARLA simulator [1].

Images

The SOCD dataset contains 15,000 perspective image pairs rendered from cameras in city-like environments of the CARLA simulator. The images have a 90-degree field of view and a size of 1080 × 1080 pixels.
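When the depth images are used together with the RGB images, it can help to have camera intrinsics at hand. The following is a minimal sketch that derives them from the field of view and image size stated above. It assumes an ideal pinhole camera with square pixels and the principal point at the image center; these assumptions and the function name `pinhole_intrinsics` are illustrative and not part of the dataset.

```python
import math


def pinhole_intrinsics(width, height, fov_deg):
    """Return a 3x3 intrinsic matrix (nested lists) for an ideal pinhole camera.

    Assumption: fov_deg is the horizontal field of view, pixels are square,
    and the principal point lies at the image center.
    """
    # Focal length in pixels: half the image width over tan(half the FOV).
    focal = (width / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    return [
        [focal, 0.0, width / 2.0],
        [0.0, focal, height / 2.0],
        [0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # Values from the dataset description: 90-degree FOV, 1080 x 1080 images.
    for row in pinhole_intrinsics(1080, 1080, 90.0):
        print(row)
```

With a 90-degree field of view, the focal length comes out to about half the image width, that is, roughly 540 pixels.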

Labels

In addition to pixel-level (semantic change mask) and object-level (instance mask) change labels, semantic masks for entire scenes, depth images, and correspondences between objects in image pairs are available.

There are four object categories:

- Buildings
- Cars
- Poles
- Traffic signs and lights

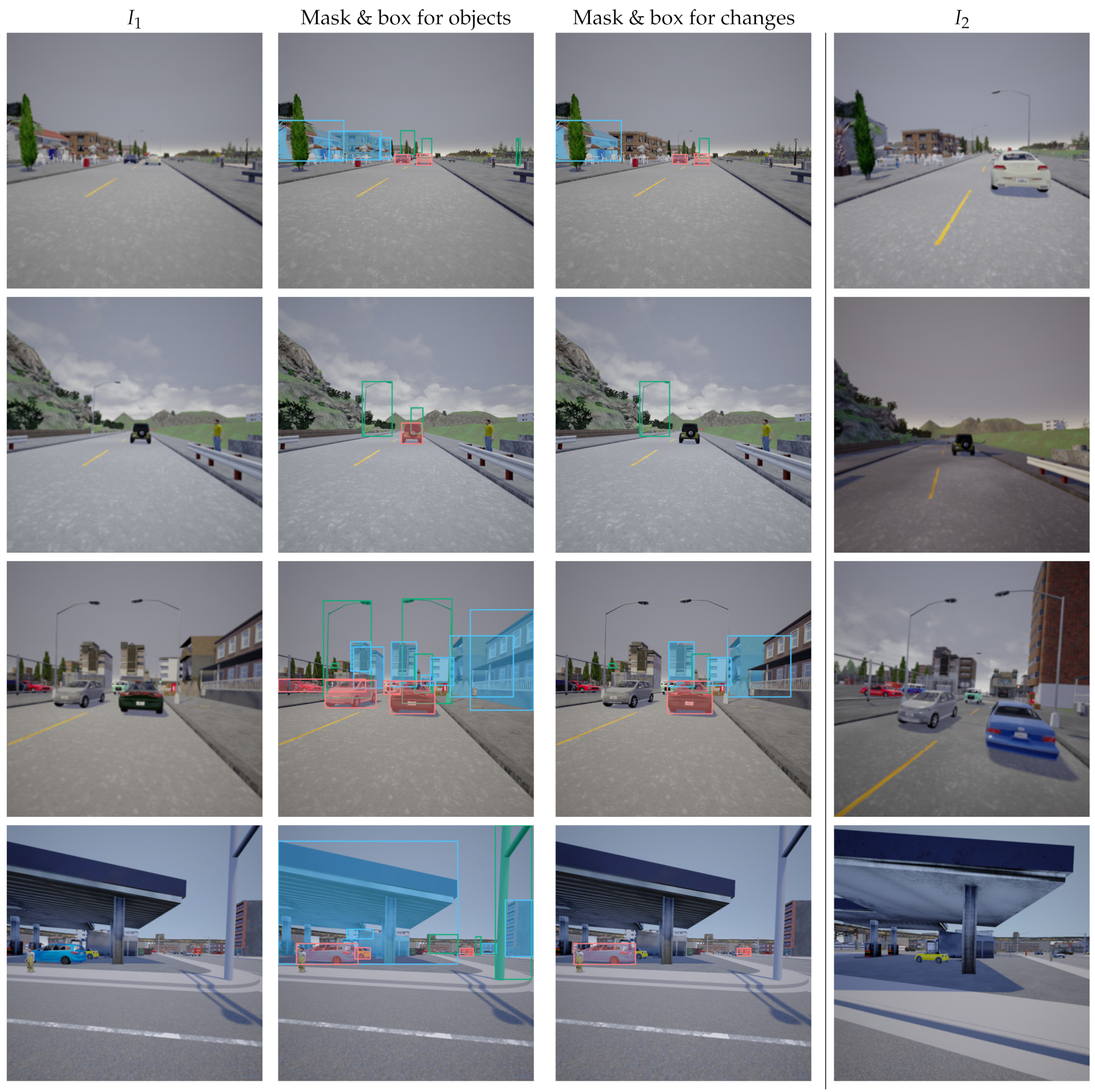

The figure below shows examples of the instance masks and bounding boxes for the objects/changes. Light blue denotes buildings, pink denotes cars, and green denotes poles. For more details on the label format, please see this repository.

Viewpoint differences

We rendered the image pairs with varying viewpoint differences to investigate the robustness of change detection methods to viewpoint differences. Specifically, the SOCD dataset is divided into four categories {S1, S2, S3, S4} with different yaw angle differences. In S1, there is no difference in yaw angle. In S2, S3, and S4, the yaw angle difference is sampled uniformly from the ranges [0, 10], [10, 20], and [20, 30] degrees, respectively.
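The sampling scheme for the four categories can be sketched as follows. This is an illustration of the description above, not the code used to generate the dataset: it assumes the ranges are in degrees, and the names `YAW_RANGES_DEG` and `sample_yaw_difference` are hypothetical.

```python
import random

# Yaw-difference range for each category (assumed to be in degrees).
# S1 has no yaw difference, so its range collapses to a single value.
YAW_RANGES_DEG = {
    "S1": (0.0, 0.0),
    "S2": (0.0, 10.0),
    "S3": (10.0, 20.0),
    "S4": (20.0, 30.0),
}


def sample_yaw_difference(category, rng=None):
    """Draw one yaw difference uniformly from the range of the given category."""
    if rng is None:
        rng = random
    low, high = YAW_RANGES_DEG[category]
    return rng.uniform(low, high)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the sketch is reproducible
    for category in YAW_RANGES_DEG:
        print(category, round(sample_yaw_difference(category, rng), 2))
```

Whether the yaw offset is applied to the left or to the right, and to which image of the pair, is not stated here, so the sketch only draws the magnitude.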

The figure below shows image pairs from the dataset with viewpoint differences. For more details, please see our paper.

Directory Structure

│
├── Town01/       # RGB images
├── Town02/       # RGB images
├── Town03/       # RGB images
├── chmasks/      # binary change masks
│   ├── Town01/
│   ├── Town02/
│   └── Town03/
├── semmask/      # semantic masks
│   ├── Town01/
│   ├── Town02/
│   └── Town03/
└── labels/       # label files
    ├── train[1-4].json
    ├── val[1-4].json
    └── test[1-4].json
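A small helper for navigating this layout might look like the sketch below. It assumes that `train[1-4].json` stands for the four files `train1.json` to `train4.json` (and likewise for `val` and `test`). The JSON schema is not described here, so the loader returns the parsed content unchanged. The function names and the root directory name `SOCD` are hypothetical.

```python
import json
from pathlib import Path

SPLITS = ("train", "val", "test")
TOWNS = ("Town01", "Town02", "Town03")


def label_path(root, split, index):
    """Build the path to a label file, e.g. <root>/labels/train1.json."""
    if split not in SPLITS:
        raise ValueError(f"unknown split: {split!r}")
    if index not in (1, 2, 3, 4):
        raise ValueError(f"index must be 1-4, got {index!r}")
    return Path(root) / "labels" / f"{split}{index}.json"


def town_dirs(root, town):
    """Return the RGB, change-mask, and semantic-mask directories of a town."""
    if town not in TOWNS:
        raise ValueError(f"unknown town: {town!r}")
    root = Path(root)
    return {
        "rgb": root / town,
        "change_mask": root / "chmasks" / town,
        "semantic_mask": root / "semmask" / town,
    }


def load_labels(root, split, index):
    """Parse one label file and return its content as-is."""
    with label_path(root, split, index).open(encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    print(label_path("SOCD", "train", 1))
    for name, path in town_dirs("SOCD", "Town01").items():
        print(name, path)
```

Validating the split, index, and town names up front surfaces a typo as a clear `ValueError` rather than as a missing-file error later on.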