Our proposed network robustly detects

We developed a robust object-level change detection method that could capture distinct scene changes in an image pair with viewpoint differences.

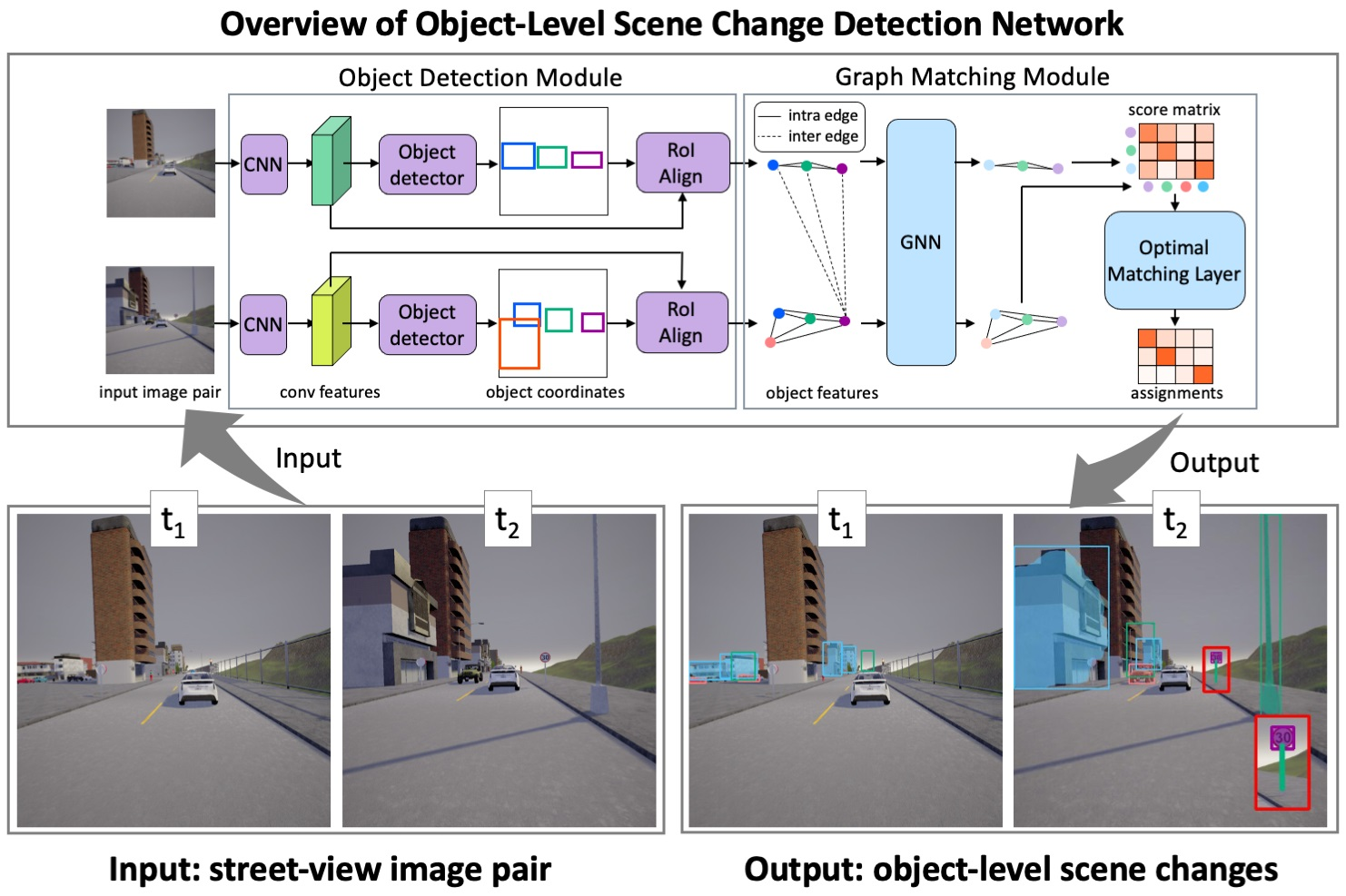

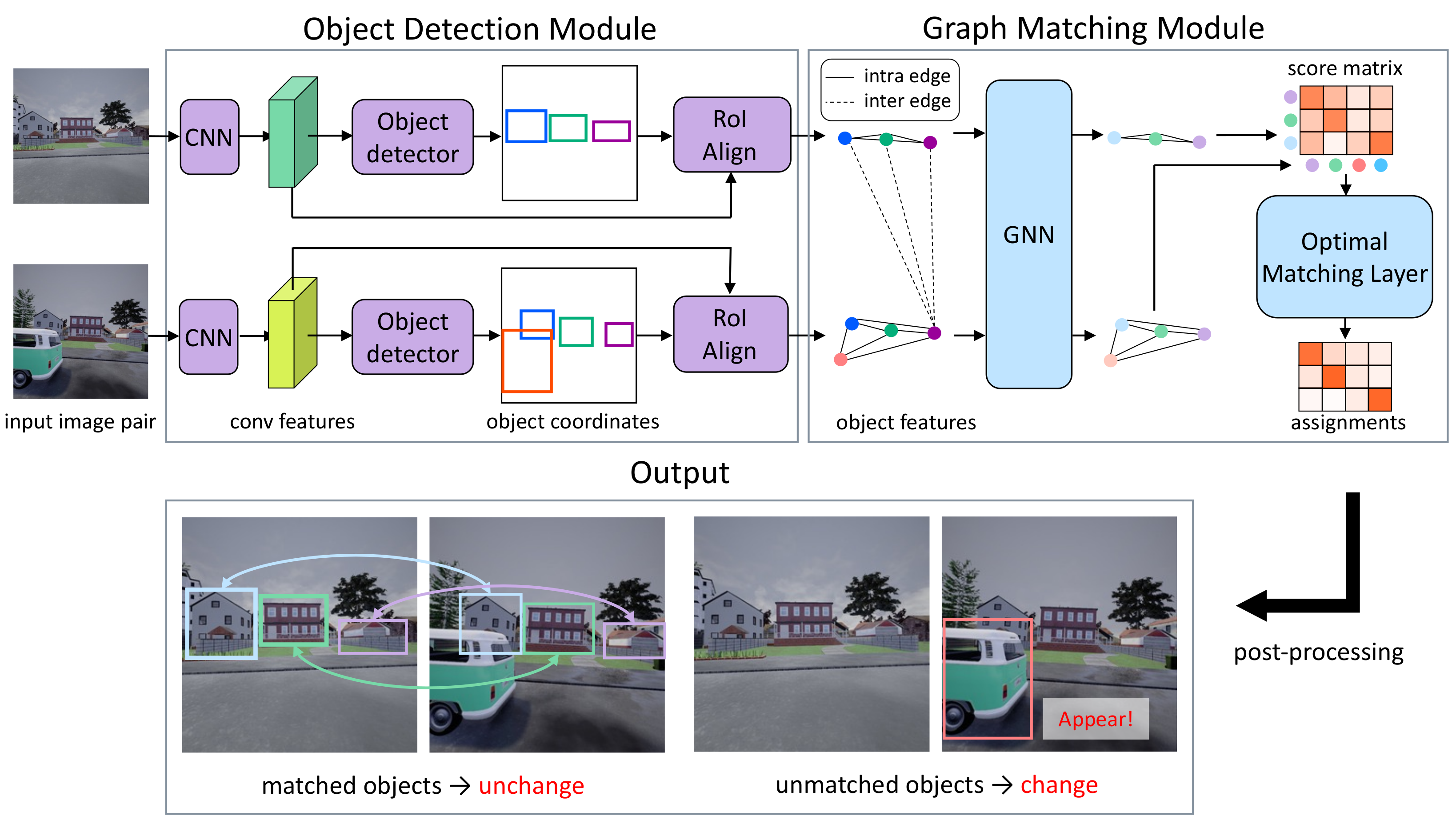

To achieve this, we designed a network that detects object-level changes in an image pair. In contrast to previous studies, we treated change detection as a graph matching problem between two object graphs, one extracted from each image. As a result, the proposed network detected object-level changes under viewpoint differences more robustly than existing pixel-level approaches. In addition, the network did not require the pixel-level change annotations that previous studies relied on. Specifically, the network extracted the objects in each image using an object detection module and then established correspondences between the objects using an object matching module. Finally, it detected objects that appeared or disappeared in the scene from the correspondences obtained between the objects.
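
The last step of this pipeline, turning object correspondences into appeared/disappeared labels, can be illustrated with a minimal sketch. It is not the network itself: it assumes each detected object is already summarized by a feature vector, and it replaces the learned matching module with a greedy cosine-similarity matching. The function names (`cosine`, `match_objects`, `detect_changes`), the `threshold` value, and the toy features are all hypothetical and chosen only for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def match_objects(feats_a, feats_b, threshold=0.8):
    """Greedy one-to-one matching; a stand-in for a learned matching module."""
    # Score every cross-image pair and visit them from most to least similar.
    pairs = sorted(
        ((cosine(fa, fb), i, j)
         for i, fa in enumerate(feats_a)
         for j, fb in enumerate(feats_b)),
        reverse=True,
    )
    matches, used_a, used_b = {}, set(), set()
    for sim, i, j in pairs:
        if sim < threshold:
            break  # remaining pairs are too dissimilar to correspond
        if i in used_a or j in used_b:
            continue  # each object may be matched at most once
        matches[i] = j
        used_a.add(i)
        used_b.add(j)
    return matches


def detect_changes(feats_a, feats_b, threshold=0.8):
    """Unmatched objects in image A disappeared; unmatched in image B appeared."""
    matches = match_objects(feats_a, feats_b, threshold)
    matched_b = set(matches.values())
    disappeared = [i for i in range(len(feats_a)) if i not in matches]
    appeared = [j for j in range(len(feats_b)) if j not in matched_b]
    return disappeared, appeared


# Toy features (hypothetical): three objects before, three objects after.
feats_before = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
feats_after = [[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0]]
disappeared, appeared = detect_changes(feats_before, feats_after)
print("disappeared:", disappeared, "appeared:", appeared)
```

In this toy case two objects are matched across the images, so only the third object of each image is reported as changed. Because the decision is made per object rather than per pixel, a shift in viewpoint only matters to the extent that it alters the object features.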

To verify the effectiveness of the proposed network, we created a synthetic dataset of images that contained object-level changes. In experiments on the created dataset, the proposed method improved the F1 score of conventional methods by more than 40%.

We proposed a novel deep neural network that can detect

To demonstrate the effectiveness of our proposed network, we conducted experiments using the SOCD dataset.

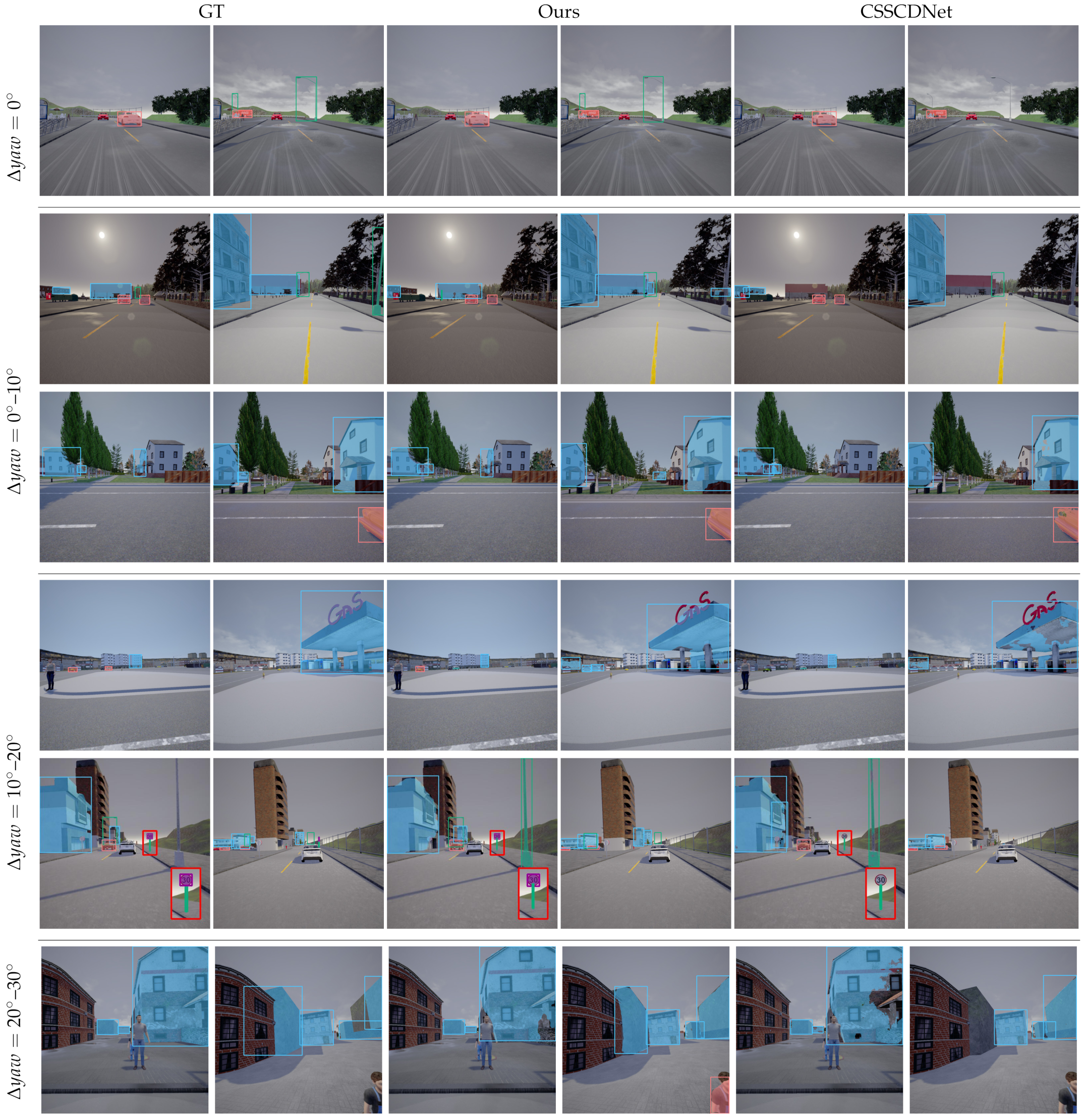

As shown in the figure, our network successfully detected object-level changes even when viewpoint differences existed between the images (rows 2 to 6). The proposed method also detected thin objects (row 1) and small objects (row 5). Furthermore, its change masks were more accurate than those of the existing methods when the viewpoint difference was relatively large (rows 4, 5, and 6).

The table below presents the results of the object-level change detection methods on the SOCD dataset. When there were no viewpoint differences between the images, the baseline method with CSSCDNet [2] produced the best score. By contrast, when there were viewpoint differences between the images, the proposed method outperformed all baseline methods and improved the score by more than 40% relative to the best baseline.

Columns give the viewpoint difference (Δyaw) between the two images.

| Method | Δyaw=0° | Δyaw=0-10° | Δyaw=10-20° | Δyaw=20-30° |
|---|---|---|---|---|
| ChangeNet [1] | 0.219 | 0.142 | 0.155 | 0.133 |
| CSSCDNet [2] (w/o correlation layer) | 0.453 | 0.234 | 0.241 | 0.205 |
| CSSCDNet [2] (w/ correlation layer) | 0.508 | 0.274 | 0.258 | 0.208 |
| Ours (w/o graph matching module) | 0.406 | 0.337 | 0.335 | 0.295 |
| Ours | 0.463 | 0.401 | 0.396 | 0.354 |
| Ours (w/ GT mask) | 0.852 | 0.650 | 0.652 | 0.546 |
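
The "more than 40%" figure can be checked against the reported scores. The short sketch below assumes the figure refers to the relative gain of the "Ours" row over the best of the three ChangeNet/CSSCDNet rows in each viewpoint bin; that reading is an assumption, not something stated explicitly. Under it, the gains are roughly 46%, 53%, and 70% for the three bins with viewpoint differences, while the Δyaw=0° bin shows a loss relative to CSSCDNet with the correlation layer.

```python
# Scores copied from the results table
# (columns: Δyaw = 0°, 0-10°, 10-20°, 20-30°).
baselines = {
    "ChangeNet": [0.219, 0.142, 0.155, 0.133],
    "CSSCDNet (w/o correlation layer)": [0.453, 0.234, 0.241, 0.205],
    "CSSCDNet (w/ correlation layer)": [0.508, 0.274, 0.258, 0.208],
}
ours = [0.463, 0.401, 0.396, 0.354]
bins = ["0°", "0-10°", "10-20°", "20-30°"]


def relative_gain(ours, baselines):
    """Percent gain of `ours` over the best baseline in each viewpoint bin."""
    gains = []
    for k, score in enumerate(ours):
        best = max(scores[k] for scores in baselines.values())
        gains.append((score - best) / best * 100.0)
    return gains


gains = relative_gain(ours, baselines)
for name, gain in zip(bins, gains):
    print(f"Δyaw = {name}: {gain:+.1f}%")
```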

    @article{objcd,
      author = {Doi, Kento and Hamaguchi, Ryuhei and Iwasawa, Yusuke and Onishi, Masaki and Matsuo, Yutaka and Sakurada, Ken},
      title = {Detecting Object-Level Scene Changes in Images with Viewpoint Differences Using Graph Matching},
      journal = {Remote Sensing},
      volume = {14},
      number = {17},
      year = {2022},
    }

This work was partially supported by JSPS KAKENHI (grant number 20H04217).